No edit summary |

(this should probably be only in ResultsDB_Legacy category) |

||

| (2 intermediate revisions by one other user not shown) | |||

| Line 1: | Line 1: | ||

= Database schema = | = Database schema = | ||

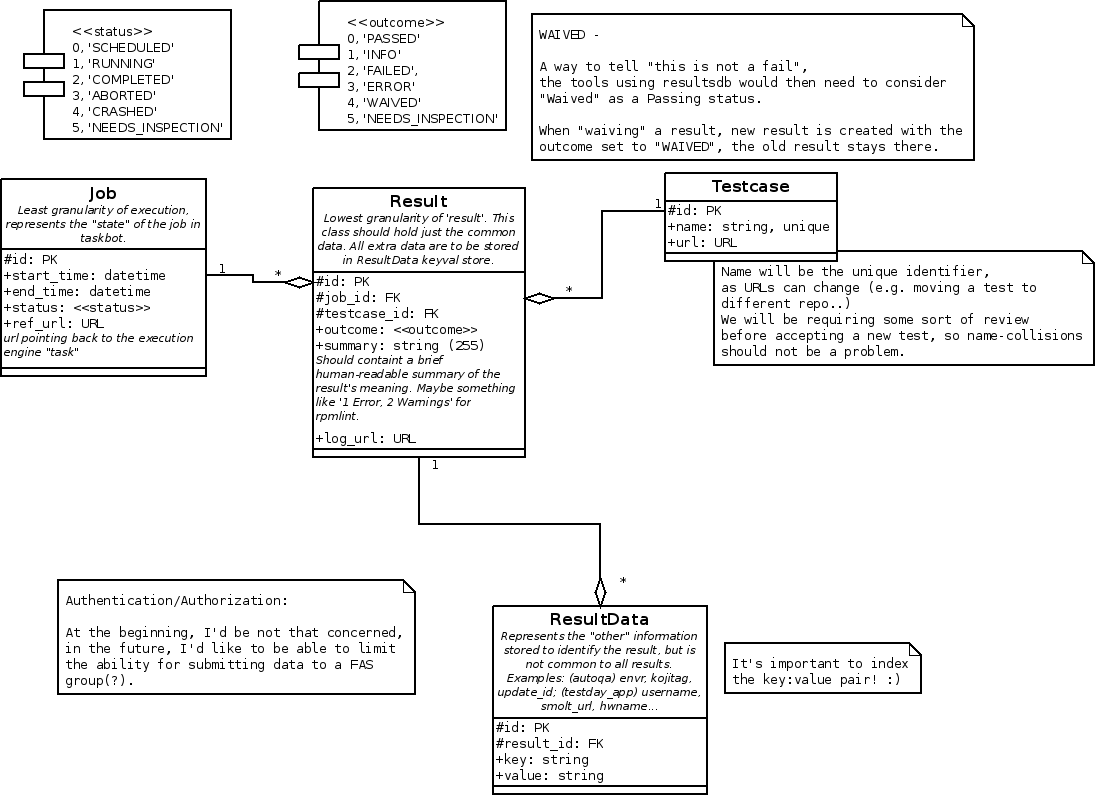

== Current WIP for Taskbot == | |||

[[Image:ResultsDB_v2.png]] | |||

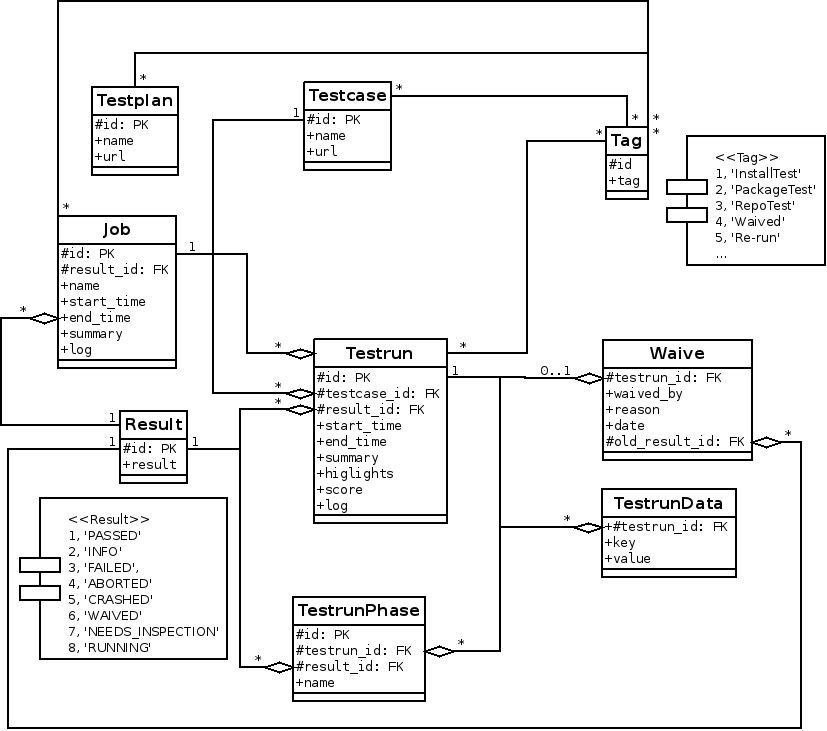

== Current AutoQA/Production Schema == | |||

[[Image:Autoqa_resultdb_dbschema_4.png]] | [[Image:Autoqa_resultdb_dbschema_4.png]] | ||

| Line 63: | Line 73: | ||

** detailed info - detailed state of the testcases (passed/failed/running, detailed phases [if described on the wiki page]) | ** detailed info - detailed state of the testcases (passed/failed/running, detailed phases [if described on the wiki page]) | ||

[[Category: | [[Category:ResultsDB_Legacy]] | ||

Latest revision as of 14:49, 7 May 2020

Database schema

Current WIP for Taskbot

Current AutoQA/Production Schema

The primary goal in designing the database schema was versatility. The schema should be able to store data from tests that have not been written yet, and to meet the needs of 'other activities' that may become relevant in the future.

Testrun Table

Each row in the Testrun table corresponds to a single execution of a test. The table stores only the information common to all current and future tests.

TestrunData Table

Any data not covered by attributes in Testrun (such as the tested package or update, architecture, etc.) is stored in the TestrunData table as key-value pairs. The potential and expected keys for these key-value pairs will be defined by test metadata. It is possible to include information about which keys are 'always present' and which are 'sometimes present' [1].
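The split between Testrun and TestrunData can be illustrated with a minimal sketch. The table and column names below (`testrun`, `testrun_data`, `testcase`, `result`), the `rpmlint` test name, and the helper functions are assumptions made for illustration; they are not taken from the actual schema shown in the diagrams.

```python
import sqlite3

# Hypothetical, simplified tables; column names are illustrative only.
SCHEMA = """
CREATE TABLE testrun (
    id       INTEGER PRIMARY KEY,
    testcase TEXT NOT NULL,
    result   TEXT
);
CREATE TABLE testrun_data (
    testrun_id INTEGER NOT NULL REFERENCES testrun(id),
    key        TEXT NOT NULL,
    value      TEXT NOT NULL
);
"""


def store_testrun(conn, testcase, result, data):
    """Insert one testrun plus its key-value pairs; return the new testrun id."""
    cur = conn.execute(
        "INSERT INTO testrun (testcase, result) VALUES (?, ?)",
        (testcase, result),
    )
    testrun_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO testrun_data (testrun_id, key, value) VALUES (?, ?, ?)",
        [(testrun_id, k, v) for k, v in data.items()],
    )
    return testrun_id


def load_data(conn, testrun_id):
    """Return the key-value pairs of one testrun as a dict."""
    rows = conn.execute(
        "SELECT key, value FROM testrun_data WHERE testrun_id = ?",
        (testrun_id,),
    )
    return dict(rows.fetchall())


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
run_id = store_testrun(
    conn, "rpmlint", "PASSED", {"pkg_name": "foo", "arch": "x86_64"}
)
```

Because test-specific attributes live in rows rather than columns, a new kind of test can introduce new keys without a schema change, which is the versatility the design aims for.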

TestrunPhase Table

Testruns can be divided into phases (e.g. the setup phase of the test, or downloading packages from Koji), which are stored in the TestrunPhase table. If the test developer splits the test into multiple phases, each phase has its own result in addition to the global result stored in the Testrun table. Once tests are split up into phases, it will be possible to use test metadata to determine the severity of failures (e.g. a failure during the setup phase is not as severe as a failure during the test phase).
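How per-phase results could feed a severity decision might look like the sketch below. The phase names, the severity labels, the `Phase` class, and the `PHASE_SEVERITY` mapping are all hypothetical; in the design described above, the mapping would come from test metadata.

```python
from dataclasses import dataclass

# Assumed mapping from phase name to failure severity; in practice this
# would be supplied by test metadata, not hard-coded.
PHASE_SEVERITY = {"setup": "low", "test": "high"}


@dataclass
class Phase:
    name: str
    result: str  # e.g. "PASSED" or "FAILED"


def failure_severity(phases, severity_by_phase=PHASE_SEVERITY):
    """Return the severity of the first failed phase, or None if none failed."""
    for phase in phases:
        if phase.result == "FAILED":
            return severity_by_phase.get(phase.name, "unknown")
    return None
```

For example, a testrun whose setup phase failed would be reported with "low" severity, while one that failed in the test phase would be reported as "high".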

Job Table

Expected default key-values for basic test classes

Installation tests

- release_name

- compose_date

- arch

Repository tests

- repo_name

- compose_date

- arch

Package tests

- pkg_name

- envr

- arch

Recommended:

- koji_tag

Specific keyval examples - tests using Bodhi hook:

- bodhi_tag/request -- records which state the package was in within Bodhi

Specific keyval examples - comparative tests:

- counterpart_name

- counterpart_envr

- counterpart_koji_tag

- counterpart_bodhi_tag/request, ...
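The default keys listed above lend themselves to a simple completeness check. The following is a sketch only: the class labels (`installation`, `repository`, `package`) and the `missing_keys` helper are names invented here, and only the required keys from the lists are checked, not the recommended or test-specific ones.

```python
# Default keys per basic test class, copied from the lists above.
# The dictionary keys naming each class are illustrative labels.
EXPECTED_KEYS = {
    "installation": {"release_name", "compose_date", "arch"},
    "repository": {"repo_name", "compose_date", "arch"},
    "package": {"pkg_name", "envr", "arch"},
}


def missing_keys(test_class, data):
    """Return the sorted default keys that are absent from a testrun's data."""
    return sorted(EXPECTED_KEYS[test_class] - set(data))
```

A package test that reports only `pkg_name` and `arch` would be flagged as missing `envr`.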

Next Steps

Package Update Acceptance Test Plan Frontend

- Create a simple frontend like: https://fedoraproject.org/wiki/File:Package_acceptance_dashboard.png

  - Where do I get recent builds?

  - Progress bar:

    - How many tests are there for the test plan? (source: the test plan wiki page)

    - What is the progress? (source: resultsdb) How do we get 'the right' results? Is 'take the latest tests for the envra' an acceptable approach? Jobs in autoqa?

  - Detailed info: detailed state of the test cases (passed/failed/running, detailed phases [if described on the wiki page])
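One possible reading of the 'take the latest tests for envra' idea is sketched below. It is not a decided approach: the record fields (`testcase`, `envra`, `finished`, `result`), the `RUNNING` state label, and both function names are assumptions made for illustration.

```python
def latest_results(results):
    """Keep only the newest result for each (testcase, envra) pair."""
    latest = {}
    for r in results:
        key = (r["testcase"], r["envra"])
        if key not in latest or r["finished"] > latest[key]["finished"]:
            latest[key] = r
    return latest


def progress(results, planned_testcases, envra):
    """Return (finished, planned) counts for one envra.

    A planned test case counts as finished when its newest result exists
    and is not still running.
    """
    latest = latest_results(results)
    finished = sum(
        1
        for testcase in planned_testcases
        if (testcase, envra) in latest
        and latest[(testcase, envra)]["result"] != "RUNNING"
    )
    return finished, len(planned_testcases)
```

Here `planned_testcases` stands for the list that would be read from the test plan wiki page, and `finished` is any comparable timestamp. Whether an older result should ever override a newer one (for example, after a rerun in a different job) remains the open question raised above.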