From Fedora Project Wiki


== Use cases for the AutoQA resultsdb == | == Use cases for the AutoQA resultsdb == | ||

=== | '''Some of these use cases are not specific to resultdb, they should be moved to [[AutoQA Use Cases]].''' | ||

=== Package maintainer === | |||

* Michael wants to push a new update for his package. | |||

** Michael just submits his package update to Fedora Update System and it takes care of the rest. Michael is informed if there is some problem with the update. | |||

: '''-> move to general use cases''' | |||

* Michael wants to know how the AutoQA tests are progressing on his update. | |||

** Michael inspects the progress of AutoQA test in a web front-end. Michael can easily see if the tests are waiting for an available hardware, or they have already started, or they have already all finished. Michael sees the results of already completed tests continuously and may explore them even though all the tests have not finished yet. | |||

: '''-> We have to have the notion how many test cases are in the test plan (or even test phases in the test case?). That is kind of TCMS stuff.''' | |||

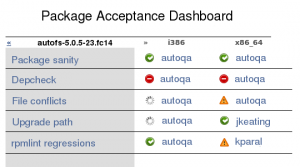

:: [[Image:package_acceptance_detail.png|300px]] | |||

* Michael wants to know if there is something he could do to improve the quality of his package. | |||

** Michael observes AutoQA results for recent updates of his package in a web front-end. He goes through the output of advisory tests and also through the output of other tests that is marked as "attention recommended". | |||

: '''-> Test result table must contain another field 'emphasized output' (propose better name - 'highlights'?(jskladan)), that will contain important alerts and notices suggested (by the test run) for reviewers to see.''' | |||

* Michael was warned that his package update failed automated QA testing and wants to see the cause. | |||

** Michael inspects AutoQA results for the last update of his package in a web front-end. He goes through the mandatory and introspection tests output and reads the summary. If additional information are needed, he opens the full tests' logs. | |||

: '''-> Test results must contain summary and link to full logs.''' | |||

:: [[Image:package_acceptance_detail.png|300px]] | |||

* Michael wants to waive errors related to his package update, because he's sure the errors are false. | |||

** Michael logs with his Fedora account to a AutoQA web front-end to prove he has privileges to waive errors on a particular package. He inspects the errors on his package update and waives them if they are false. | |||

: '''-> The database must support waiving mechanism.''' | |||

* Michael wants to report a false error from one of AutoQA test cases and ask AutoQA maintainers for a fix. | |||

** Michael follows instructions on [[AutoQA]] wiki page (or web front-end) and uses a mailing list or bug tracker to report his problem. | |||

: '''-> move to general use cases''' | |||

=== QA member === | |||

* Caroline knows that some recent tests failed because of hardware problems with test clients. She wants to re-run the tests again. | |||

** Caroline logs in into the AutoQA web front-end, selects desired tests and instructs AutoQA to run them again. | |||

: '''-> Re-running is a matter of the harness, but the database could support flagging certain test results as re-run.''' | |||

* Caroline declares a war on LSB-incompliant initscripts. She wants to have all packages tested for this. | |||

** Caroline uses CLI to query AutoQA Results DB for results of 'Initscripts check' test for all recently updated packages. If she is not satisfied, she will instruct AutoQA to run this test on all available packages. '''<<FIXME - how?>>''' | |||

: '''-> ResultDB must support queries for most common cases (according to time, test class, test name, tag, etc.). Second sentence is a problem of harness.''' | |||

* Caroline must ensure that everything works at a certain milestone (date). | |||

** Caroline uses the AutoQA web front-end and searches for relevant results (installation, package update) related to a given date. She examines the results and decides whether everything is fine or not. | |||

=== Developer === | |||

* Nathan is curious about possible ways to make his program better. | |||

** Nathan checks the web front-end of AutoQA and displays a list of tests that were performed on his program recently. He checks the tests' descriptions and chooses those tests which are related to the program itself, not to packaging. He inspects the results of these tests and looks for errors, warnings or other comments on program's (wrong) functionality. Some serious errors should have been probably already reported to Nathan by corresponding package maintainer, but there may be other less-serious issues that were not. | |||

* Kate is heavily interested in Rawhide composition. She wants to be every day notified if composing of Rawhide succeeded or failed, eventually why. | |||

** Kate creates a script that remotely queries AutoQA Results DB and asks for current Rawhide compose testing status. She runs this script every day. In case Rawhide composition fails, the script notifies her and also presents her with a link leading to a AutoQA web front-end with more detailed results of the whole test. | |||

: '''-> Notification support should be part of the TCMS or we should use some message bus.''' | |||

:: [[Image:irb.png|200px]] | |||

=== AutoQA test developer === | |||

* Brian developed a new test, which is now deployed to the AutoQA test instance. Brian wants to receive notifications of all the results of his test to be able to track if everything works well. | |||

** Option 1: Brian needs to write a script that will query periodically ResultDB and notify him about new results. | |||

** Option 2: AutoQA must provide an option how to define which events you are interested in listening to. | |||

** Option 3: AutoQA must provide some interesting events exports (like web syndication feeds) that would exactly suit Brian's needs. | |||

: '''-> Again not part of resultDB, message bus is best solution.''' | |||

* Brian needs to be notified about all tests which were scheduled to run in AutoQA but that have not finished successfully (test crashed, AutoQA crashed, operating system crashed, host hardware crashed). | |||

** '''<<FIXME - how?>>> | |||

: '''-> Not problem of ResultDB. But we must support some result like CRASHED to indicate the job has not been completed neither it will be.''' | |||

=== Fedora tools === | |||

* Fedora Update System needs to know what the current status of AutoQA tests is for a particular ENVR. | |||

** Fedora Update Systems asks ResultsDB about a particular ENVR and receives an answer whether the tests were already executed or not and eventually their result. | |||

* ResultsDB front-ends need to display not only results to individual packages, but also overview of results summaries ('Show me all failed tests for the last week'). | |||

* Fedora Update System wants to have relevant people notified when the status of AutoQA tests changes for a particular update. | |||

** Option 1 - Fedora Update System sends the notices: AutoQA must support registering a listener. Fedora Update System registers a listener for a particular update and AutoQA notifies it for every change on that update (this is particularly needed for subsequent changes, like UNTESTED → NEEDS_INSPECTION → WAIVED). | |||

** Option 2 - AutoQA sends the notices: AutoQA has hardcoded in the tests whom to send noticies. | |||

** Option 3 - AutoQA sends the notices: Fedora Update System supports querying for the recipients of the notices. AutoQA query Fedora Update System for the recipients and sends them a notice. | |||

** Option 4 - Fedora Notification Bus: All update result changes are sent to the common Notification Bus and anybody can catch those notifications and do anything he wants with it. This is in a far future. | |||

: '''-> Notification bus.''' | |||

=== General public === | |||

* Karen is just interested how automated testing works and what are the recent results. | |||

** Karen opens up an AutoQA web front-end and browses through the results. | |||

:: [[Image:package_acceptance_dashboard.png|200px]] | |||

[[Category:ResultsDB_Legacy]] | |||

[[Category:AutoQA]] | |||

Latest revision as of 13:02, 22 January 2014

Task: [kparal + jskladan] - Define personas and write use cases

Example: No_frozen_rawhide_announce_plan#Use_Cases

Use cases for the AutoQA resultsdb

Some of these use cases are not specific to ResultsDB; they should be moved to AutoQA Use Cases.

Package maintainer

- Michael wants to push a new update for his package.

- Michael just submits his package update to the Fedora Update System, which takes care of the rest. Michael is informed if there is a problem with the update.

- -> move to general use cases

- Michael wants to know how the AutoQA tests are progressing on his update.

- Michael inspects the progress of the AutoQA tests in a web front-end. He can easily see whether the tests are waiting for available hardware, have already started, or have all finished. Results of completed tests appear continuously, so he can explore them before the remaining tests have finished.

- -> We need to know how many test cases are in the test plan (or even how many test phases are in a test case?). That is kind of TCMS stuff.
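A minimal sketch of how a front-end could derive this progress view, assuming the list of planned test names is available from somewhere (for example a TCMS). The state names and the `summarize_progress` helper are illustrative assumptions, not part of any existing AutoQA API.

```python
from collections import Counter
from enum import Enum


class JobState(Enum):
    # Hypothetical states matching the three situations in the use case.
    WAITING = "waiting for hardware"
    RUNNING = "running"
    FINISHED = "finished"


def summarize_progress(planned_tests, job_states):
    """Count planned tests per state.

    planned_tests: names of all tests in the test plan.
    job_states: dict mapping test name -> JobState for tests known to the harness.
    Tests with no recorded state are counted as WAITING.
    """
    counts = Counter(job_states.get(name, JobState.WAITING) for name in planned_tests)
    total = len(planned_tests)
    return {
        "total": total,
        "waiting": counts[JobState.WAITING],
        "running": counts[JobState.RUNNING],
        "finished": counts[JobState.FINISHED],
        "all_finished": total > 0 and counts[JobState.FINISHED] == total,
    }
```

Without the total number of planned tests, the front-end could not tell "all finished" apart from "nothing else has reported yet", which is why the note above asks for it.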

- Michael wants to know if there is something he could do to improve the quality of his package.

- Michael reviews the AutoQA results for recent updates of his package in a web front-end. He goes through the output of advisory tests and also through the output of other tests that is marked as "attention recommended".

- -> The test result table must contain another field, 'emphasized output' (propose a better name, 'highlights'? (jskladan)), that will contain important alerts and notices that the test run suggests reviewers should see.
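As a rough illustration of the proposed field, a result record could look like the sketch below. The class name, the field names other than 'highlights', and the outcome strings are assumptions made for this example only.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ResultRecord:
    # All names here are hypothetical; only the idea of a 'highlights'
    # field comes from the note above.
    test_name: str
    outcome: str                # e.g. "PASSED" or "FAILED" (assumed values)
    summary: str = ""           # short human-readable summary
    log_url: str = ""           # link to the full logs
    highlights: List[str] = field(default_factory=list)  # alerts for reviewers


def results_needing_attention(results):
    """Return results that carry at least one highlight, whatever their outcome."""
    return [r for r in results if r.highlights]
```

Keeping highlights separate from the outcome lets a passing advisory test still draw a reviewer's attention.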

- Michael was warned that his package update failed automated QA testing and wants to see the cause.

- Michael inspects the AutoQA results for the last update of his package in a web front-end. He goes through the output of the mandatory and introspection tests and reads the summary. If additional information is needed, he opens the full test logs.

- -> Test results must contain a summary and a link to the full logs.

- Michael wants to waive errors related to his package update, because he's sure the errors are false.

- Michael logs in to an AutoQA web front-end with his Fedora account to prove he has the privileges to waive errors on a particular package. He inspects the errors on his package update and waives them if they are false.

- -> The database must support a waiving mechanism.
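One way the waiving mechanism might be modelled is sketched below. The status names NEEDS_INSPECTION and WAIVED appear elsewhere on this page; the record layout, the `waive` function and the maintainer lookup are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PackageResult:
    package: str
    status: str                       # e.g. "NEEDS_INSPECTION"
    waived_by: Optional[str] = None   # account that waived the result
    waive_reason: Optional[str] = None


def waive(result, user, reason, maintainers):
    """Waive a result if `user` is allowed to do so for the package.

    maintainers: dict mapping package name -> set of account names
    (a stand-in for whatever privilege check the front-end performs).
    """
    if user not in maintainers.get(result.package, set()):
        raise PermissionError("%s may not waive results for %s" % (user, result.package))
    if result.status != "NEEDS_INSPECTION":
        raise ValueError("only results needing inspection can be waived")
    result.status = "WAIVED"
    result.waived_by = user
    result.waive_reason = reason
    return result
```

Storing who waived a result and why keeps the original failure auditable instead of silently overwriting it.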

- Michael wants to report a false error from one of AutoQA test cases and ask AutoQA maintainers for a fix.

- Michael follows the instructions on the AutoQA wiki page (or web front-end) and uses a mailing list or bug tracker to report his problem.

- -> move to general use cases

QA member

- Caroline knows that some recent tests failed because of hardware problems with test clients. She wants to re-run those tests.

- Caroline logs in to the AutoQA web front-end, selects the desired tests and instructs AutoQA to run them again.

- -> Re-running is a matter of the harness, but the database could support flagging certain test results as re-run.

- Caroline declares war on LSB-noncompliant initscripts. She wants to have all packages tested for this.

- Caroline uses a CLI to query the AutoQA Results DB for results of the 'Initscripts check' test for all recently updated packages. If she is not satisfied, she will instruct AutoQA to run this test on all available packages. <<FIXME - how?>>

- -> ResultsDB must support queries for the most common cases (by time, test class, test name, tag, etc.). The second sentence is a problem for the harness.
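The query criteria listed in the note could be combined as in the following sketch, which filters an in-memory list purely for illustration. The dictionary keys and the `query_results` signature are assumptions; a real implementation would translate the same filters into database queries.

```python
def query_results(results, since=None, test_class=None, test_name=None, tag=None):
    """Return results matching every filter that is not None.

    Each result is assumed to be a dict with the keys 'finished' (a datetime),
    'test_class', 'test_name' and 'tags' (a collection of strings).
    """
    matched = []
    for result in results:
        if since is not None and result["finished"] < since:
            continue
        if test_class is not None and result["test_class"] != test_class:
            continue
        if test_name is not None and result["test_name"] != test_name:
            continue
        if tag is not None and tag not in result["tags"]:
            continue
        matched.append(result)
    return matched
```

Caroline's query would then amount to something like `query_results(all_results, since=one_week_ago, test_name="Initscripts check")`, where `all_results` and `one_week_ago` are placeholders.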

- Caroline must ensure that everything works at a certain milestone (date).

- Caroline uses the AutoQA web front-end and searches for relevant results (installation, package update) related to a given date. She examines the results and decides whether everything is fine or not.

Developer

- Nathan is curious about possible ways to make his program better.

- Nathan opens the AutoQA web front-end and displays a list of tests that were recently performed on his program. He checks the test descriptions and chooses the tests that relate to the program itself, not to packaging. He inspects the results of these tests and looks for errors, warnings or other comments on the program's (faulty) functionality. Serious errors have probably already been reported to Nathan by the corresponding package maintainer, but there may be other, less serious issues that were not.

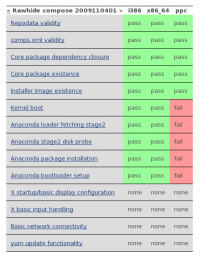

- Kate is very interested in the Rawhide compose. She wants to be notified every day whether composing Rawhide succeeded or failed, and possibly why.

- Kate creates a script that remotely queries the AutoQA Results DB and asks for the current Rawhide compose testing status. She runs this script every day. If the Rawhide compose fails, the script notifies her and also presents her with a link to an AutoQA web front-end with more detailed results of the whole test.

- -> Notification support should be part of the TCMS, or we should use some message bus.
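Kate's daily script might be structured roughly as follows. Because the page does not define a remote query interface, the sketch takes the query and the notification as injected callables; the outcome value "PASSED" and the 'details_url' key are assumptions.

```python
def check_rawhide_compose(fetch_status, notify):
    """Fetch the current compose test status and notify only on failure.

    fetch_status: callable returning a dict with the assumed keys
        'outcome' and 'details_url' (link to the web front-end).
    notify: callable taking one message string (mail, IRC, ...).
    Returns True if the compose tests passed, False otherwise.
    """
    status = fetch_status()
    if status["outcome"] == "PASSED":
        return True
    notify("Rawhide compose testing reported %s; details: %s"
           % (status["outcome"], status["details_url"]))
    return False
```

Run once a day from a scheduler, this is the polling approach; the note above points out that a message bus would remove the need to poll.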

AutoQA test developer

- Brian developed a new test, which is now deployed to the AutoQA test instance. Brian wants to receive notifications of all the results of his test to be able to track if everything works well.

- Option 1: Brian needs to write a script that periodically queries ResultsDB and notifies him about new results.

- Option 2: AutoQA must provide a way to define which events you are interested in listening to.

- Option 3: AutoQA must provide exports of interesting events (such as web syndication feeds) that would suit Brian's needs exactly.

- -> Again, not part of ResultsDB; a message bus is the best solution.

- Brian needs to be notified about all tests that were scheduled to run in AutoQA but did not finish successfully (test crashed, AutoQA crashed, operating system crashed, host hardware crashed).

- Brian needs to be notified about all tests which were scheduled to run in AutoQA but that have not finished successfully (test crashed, AutoQA crashed, operating system crashed, host hardware crashed).

- <<FIXME - how?>>

- -> Not a problem for ResultsDB. But we must support a result such as CRASHED to indicate that the job has not been completed and never will be.
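The page leaves open how crashed jobs are detected. Purely as one possible approach, a periodic sweep could assign CRASHED to jobs that were scheduled long ago and never reported an outcome. The timeout rule, the job dictionary layout and the other outcome names are assumptions, not decisions recorded here.

```python
from enum import Enum


class Outcome(Enum):
    PASSED = "PASSED"    # assumed name
    FAILED = "FAILED"    # assumed name
    CRASHED = "CRASHED"  # the result proposed in the note above


def mark_crashed(jobs, now, timeout):
    """Mark overdue jobs without an outcome as CRASHED.

    jobs: list of dicts with keys 'name', 'scheduled' (datetime) and
        'outcome' (an Outcome or None while the job is still pending).
    now: current time as a datetime; timeout: a timedelta.
    Returns the names of the jobs that were newly marked.
    """
    crashed = []
    for job in jobs:
        if job["outcome"] is None and now - job["scheduled"] > timeout:
            job["outcome"] = Outcome.CRASHED
            crashed.append(job["name"])
    return crashed
```

The returned names are what a notification for Brian could be built from, whichever delivery mechanism is chosen.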

Fedora tools

- Fedora Update System needs to know what the current status of AutoQA tests is for a particular ENVR.

- Fedora Update System asks ResultsDB about a particular ENVR and receives an answer stating whether the tests have already been executed and, if so, their results.

- ResultsDB front-ends need to display not only results for individual packages, but also overviews of result summaries ('Show me all failed tests for the last week').
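The shape of such an answer could be as simple as the sketch below. The `status_for_envr` name, the response keys and the in-memory lookup table are hypothetical stand-ins for whatever interface ResultsDB ends up exposing.

```python
def status_for_envr(results_by_envr, envr):
    """Answer whether tests ran for an ENVR and, if so, with what outcomes.

    results_by_envr: dict mapping an ENVR string -> dict of
        test name -> outcome string (an assumed storage layout).
    """
    outcomes = results_by_envr.get(envr)
    if not outcomes:
        # Unknown ENVR or no finished tests yet: report "not executed".
        return {"envr": envr, "executed": False, "outcomes": {}}
    return {"envr": envr, "executed": True, "outcomes": dict(outcomes)}
```

Returning an explicit "not executed" answer, rather than an error, lets the caller distinguish untested updates from failed ones.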

- Fedora Update System wants to have relevant people notified when the status of AutoQA tests changes for a particular update.

- Option 1 - Fedora Update System sends the notices: AutoQA must support registering a listener. Fedora Update System registers a listener for a particular update and AutoQA notifies it for every change on that update (this is particularly needed for subsequent changes, like UNTESTED → NEEDS_INSPECTION → WAIVED).

- Option 2 - AutoQA sends the notices: the recipients of the notices are hardcoded in the AutoQA tests.

- Option 3 - AutoQA sends the notices: Fedora Update System supports querying for the recipients of the notices. AutoQA queries Fedora Update System for the recipients and sends them a notice.

- Option 4 - Fedora Notification Bus: All update result changes are sent to the common Notification Bus, and anybody can catch those notifications and do whatever they want with them. This is far in the future.

- -> Notification bus.
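To make Option 1 concrete, the listener registration could look like the following in-process sketch. The class and method names are assumptions; the status names come from the example transition UNTESTED → NEEDS_INSPECTION → WAIVED above. A notification bus (Option 4) would replace the direct callbacks with published messages.

```python
from collections import defaultdict


class UpdateStatusNotifier:
    """Calls registered listeners whenever the status of an update changes."""

    def __init__(self):
        self._listeners = defaultdict(list)  # update id -> list of callbacks
        self._status = {}                    # update id -> current status

    def register(self, update_id, callback):
        """Register callback(update_id, old_status, new_status) for one update."""
        self._listeners[update_id].append(callback)

    def set_status(self, update_id, new_status):
        """Record a status and notify listeners only if it actually changed."""
        old_status = self._status.get(update_id, "UNTESTED")
        if new_status == old_status:
            return
        self._status[update_id] = new_status
        for callback in self._listeners[update_id]:
            callback(update_id, old_status, new_status)
```

Passing both the old and the new status to the listener covers the subsequent changes that Option 1 calls out.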

General public

- Karen is simply interested in how automated testing works and what the recent results are.

- Karen opens up an AutoQA web front-end and browses through the results.