| Line 63: | Line 63: | ||

== User Experience == | == User Experience == | ||

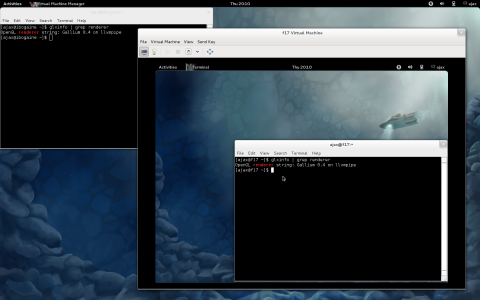

Users will get the regular GNOME 3 experience, not fallback mode, regardless of whether their hardware or driver supports GL or not. Note that software rendering does require sufficient CPU power for a good experience. | Users will get the regular GNOME 3 experience, not fallback mode, regardless of whether their hardware or driver supports GL or not. This includes virtual machines. Note that software rendering does require sufficient CPU power for a good experience. | ||

http://ajax.fedorapeople.org/heck-of-yes.png | [[File:Small-heck-of-yes.png|link=http://ajax.fedorapeople.org/heck-of-yes.png]] | ||

== Dependencies == | == Dependencies == | ||

Revision as of 00:35, 4 November 2011

Software rendering for gnome-shell

Summary

Make gnome-shell work with software-rendering on most hardware

Owner

- Name: Adam Jackson

- Email: ajax@redhat.com

Current status

- Targeted release: Fedora 17

- Last updated: 2011-11-03

- Percentage of completion: 10%

Detailed Description

Modern CPUs are in principle powerful enough to run gnome-shell and a full GNOME 3 desktop with software rendering. However, there are plenty of bugs and broken optimizations in the software-rendering stack that currently keep this from working as well as it ought to.

Benefit to Fedora

Fedora gets to provide the same experience on more systems, including virtual machines.

GNOME can phase out some components that are kept alive only because fallback mode needs them, which allows more resources to be focused on improving GNOME 3.

Scope

GNOME

gnome-session currently treats llvmpipe as an unsupported driver. This would need to be dropped. In its place we may want to replace the "Forced Fallback Mode" toggle in the System Info control panel with a tristate, to allow users to revert to llvmpipe if their native driver doesn't render correctly.
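The tristate could look roughly like the sketch below. The page does not specify the three states; the names `AUTOMATIC`, `FORCE_SOFTWARE` and `FORCE_FALLBACK`, and the `choose_session` helper, are assumptions made purely for illustration and do not correspond to any existing gnome-session setting.

```python
from enum import Enum


class RenderingMode(Enum):
    """Hypothetical tristate replacing the boolean "Forced Fallback Mode" toggle."""
    AUTOMATIC = "automatic"            # use the native driver when it is usable
    FORCE_SOFTWARE = "force-software"  # run gnome-shell on llvmpipe regardless
    FORCE_FALLBACK = "force-fallback"  # keep the old fallback session


def choose_session(mode, native_driver_usable):
    """Return a (session, renderer) pair for the given setting.

    `native_driver_usable` is an assumed input: whether a working
    hardware GL driver was detected.
    """
    if mode is RenderingMode.FORCE_FALLBACK:
        return ("fallback", None)
    if mode is RenderingMode.FORCE_SOFTWARE or not native_driver_usable:
        return ("gnome-shell", "llvmpipe")
    return ("gnome-shell", "native")
```

With this shape, a user whose native driver renders incorrectly would pick the "force software" state and still get the regular shell, rather than dropping to fallback mode.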

mutter may want to turn down the bling when not using a hardware driver. Drop-shadows on windows are an obvious easy win since that will eliminate much overdraw in common cases. The background-dim effect when entering the overlay might or might not be worth cutting out, depending on how much of the scene already needs to be updated. The animation timer might want to drop to 30fps to avoid needing to "catch up" by too much at once. And so on.
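As a rough illustration of such a policy, the sketch below picks effect settings from the GL renderer string. Everything here is hypothetical: `EffectsPolicy`, `policy_for_renderer`, the assumed 60fps default for hardware drivers, and the substring check on "llvmpipe" are illustrative choices, not mutter's actual mechanism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EffectsPolicy:
    window_shadows: bool   # draw drop-shadows on windows
    animation_fps: int     # target rate for the animation timer


def policy_for_renderer(renderer_string):
    """Pick reduced effects when the renderer looks like llvmpipe.

    Assumption: the renderer string contains "llvmpipe" when
    software rendering is in use.
    """
    if "llvmpipe" in renderer_string.lower():
        # Software path: no shadows (less overdraw), slower timer.
        return EffectsPolicy(window_shadows=False, animation_fps=30)
    # Hardware path: assumed default of full effects at 60fps.
    return EffectsPolicy(window_shadows=True, animation_fps=60)


def frame_interval_ms(policy):
    """Time budget per animation frame, in milliseconds."""
    return 1000.0 / policy.animation_fps
```

At 30fps each frame has roughly 33 ms to render instead of roughly 17 ms, which is the headroom the timer change is meant to buy.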

Kernel

llvmpipe currently does not use any DRM services. Most importantly this means that all image transfers between the X server and the compositor - both acquiring the window images with EXT_texture_from_pixmap, and uploading the new scene with glXSwapBuffers - are copies. Big, big copies. The DRM should provide a mock GEM allocator that is backed purely by system memory (optionally with the ability to bind and unbind such memory to a real driver), so that the software 3D stack can take advantage of the same kinds of zero-copy optimizations as hardware drivers.
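To get a feel for how big those copies are, here is a back-of-the-envelope calculation. The 32-bit pixel format, the 1920x1080 screen and the 60fps rate are assumptions chosen for illustration, and the function names are made up for this sketch.

```python
BYTES_PER_PIXEL = 4  # assumption: 32 bits per pixel


def frame_bytes(width, height, bytes_per_pixel=BYTES_PER_PIXEL):
    """Size of one full-screen image in bytes."""
    return width * height * bytes_per_pixel


def copy_bandwidth(width, height, fps, copies_per_frame):
    """Bytes per second spent purely on copying full-screen images."""
    return frame_bytes(width, height) * fps * copies_per_frame
```

For example, `frame_bytes(1920, 1080)` is 8,294,400 bytes, so a single full-screen copy per frame at 60fps moves about 498 MB per second, and two copies per frame (one to acquire window contents, one to upload the scene) roughly double that. Zero-copy sharing through a mock GEM allocator would avoid this traffic.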

Mesa

llvmpipe needs to be ported to the kernel mock GEM services described above.

llvmpipe may want to expose the MESA_copy_sub_buffer extension, since clutter and other toolkits contain a fast path for it that only uploads damaged screen regions instead of performing a full buffer swap. This might not be necessary if EXT_framebuffer_blit works. Currently it appears not to, but that problem may simply disappear upon porting to mock-GEM.
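The benefit of uploading only damaged regions can be estimated with a small sketch. The rectangle format `(x, y, width, height)`, the 32-bit pixel assumption, and the function names are illustrative; the rectangles are assumed not to overlap.

```python
def damaged_bytes(rects, bytes_per_pixel=4):
    """Bytes uploaded when only the damaged rectangles are copied.

    `rects` is a list of (x, y, width, height) tuples, assumed
    non-overlapping.
    """
    return sum(w * h * bytes_per_pixel for (_x, _y, w, h) in rects)


def full_swap_bytes(width, height, bytes_per_pixel=4):
    """Bytes uploaded by a full buffer swap."""
    return width * height * bytes_per_pixel


def upload_fraction(rects, width, height):
    """Fraction of a full swap that a damage-only upload costs."""
    return damaged_bytes(rects) / full_swap_bytes(width, height)
```

A single 200x100 damaged region on a 1920x1080 screen costs 80,000 bytes instead of 8,294,400, which is under 1% of a full swap.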

llvmpipe's stride limit, both for texturing and rendering, is currently 4096. This is probably sufficient: most non-native hardware has only two outputs and the effective size limit on DVI is 2048x1152, so you couldn't really go wider if you wanted to. But it may be worth investigating bumping this higher.
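The arithmetic behind "probably sufficient" can be checked with a short sketch. It assumes the 4096 limit is measured in pixels and that outputs are laid out side by side in one framebuffer; `fits_stride_limit` is a hypothetical helper, not a Mesa function.

```python
STRIDE_LIMIT = 4096  # from the text above; assumed to be in pixels


def fits_stride_limit(outputs, limit=STRIDE_LIMIT):
    """Check whether side-by-side outputs fit within the limit.

    `outputs` is a list of (width, height) tuples, one per output.
    """
    total_width = sum(w for w, _h in outputs)
    max_height = max((h for _w, h in outputs), default=0)
    return total_width <= limit and max_height <= limit
```

Two 2048x1152 outputs give a total width of exactly 4096, which just fits. Two 2560-wide outputs would need 5120 and would not, which is the kind of case that would motivate raising the limit.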

The rendering path should be audited to ensure that the software DRI and window system binding are generating as few round-trips as possible.

llvmpipe, the Mesa core, and LLVM itself are all optimization candidates. llvmpipe has so far mostly been tuned for x86, for example, and ARM or PPC may benefit greatly from small changes to make the internal data layout match their vector instruction sets as has been done for SSE2.

X11

Drivers without a native DRM driver should be ported to use mock-GEM for memory management. For almost all such drivers this will just mean using mock-GEM to allocate the shadow framebuffer. Some cases (mostly virt, where shadowfb doesn't win you anything) will want to be able to bind their front buffer directly to a GEM object, to eliminate the memcpy on scene upload.

Since llvmpipe probably wants to keep compatibility with non-mock-GEM X servers, either DRI2 or GLX needs to somehow advertise that the llvmpipe driver loaded in the server is mock-GEM-aware.

How To Test

1. Locate hardware that only supports GNOME 3 in fallback mode with F15 or F16

2. Install the F17 desktop spin

3. Verify that it provides the regular GNOME 3 experience, not fallback mode

User Experience

Users will get the regular GNOME 3 experience, not fallback mode, regardless of whether their hardware or driver supports GL or not. This includes virtual machines. Note that software rendering does require sufficient CPU power for a good experience.

Dependencies

TBD

Contingency Plan

Fallback mode will still be around for a while. If software rendering does not work out, or only works under certain circumstances, we will adapt the blacklisting mechanism currently used for fallback mode so that the full experience is attempted only when it has a chance of success, and problematic cases continue to use fallback mode.

Documentation

TBD

Release Notes

TBD