
Latest revision as of 15:21, 29 March 2017

This document is part of a series on Factory2.

Next, let’s take on Unnecessary Serialization (#2). Compared to the Dep Chain (#7) problem in the overview document, this one is a hydra. It has different, otherwise unrelated causes peppered throughout the pipeline, with responsibility located in different teams.

There are two sides to this, roughly divided by organizational responsibility: artifact production and continuous integration. Ultimately, we want to test our changes as quickly as possible, but we cannot test them until we produce testable artifacts. Both internal to Red Hat and in the Fedora Community, ownership of these functions is divided between different teams (releng and QE).

Serial Artifact Production

Signing and Containers

The primary manifestation of the problem is that we cannot build containers until we have a compose. This means that if you’re trying out a change to an RPM component, you have to commit it, build it, and get it rolled into a compose before it can be included in a container. If it breaks the container, you’re already too far down the process to easily back out your change, and you’ve wasted days waiting on other processes.

Part of the problem here is that we need to sign rpms before we can create a container out of them or the containerization will fail. By policy we try to only sign things that we intend to ship. There are semantics associated with signing in our pipeline.

That’s step one, and this work is already in progress: we need to decouple signing from what we have historically called The Compose step so we can create containers more quickly in response to component changes. We’ll use a build key to sign everything that comes out of the build system and only later sign content with a gold key, indicating that we bless it for distribution.

After that, it will be a matter of modifying OSBS to be able to build containers both out of a compose (for eventual distribution) and out of a koji tag/target’s repo.

It doesn’t stop with containers - that’s just the most eye-opening case. The problem is general. The example of containers kept popping up in discussions, but it really is only one case of an artifact being produced out of a compose. I think the reason it keeps coming up is that containers are relatively new to us, while we’ve already become accustomed to other kinds of artifacts taking a long time to produce. Containers are of the age of continuous integration. A large section of our internal customers did a double-take when our pipeline couldn’t produce them rapidly. It summoned the spectre of Factory 2.

We would like to produce every artifact that comes from a compose (repositories, live media, install media, and eventually OSTrees) as soon as a component change is introduced that warrants its production. To get there, we’re going to break the compose apart into a modular compose.

The Modular Compose

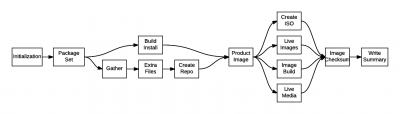

Let’s look first at how the pungi/distill compose works today. Producing a compose is broken up into phases, some of which run in serial and others which run in parallel.

At this point, take a moment to review the modularity architecture document on Constructing a Modular Distribution. Although we won’t get to a fully modular compose until a further step down the Factory 2.0 road, we’ll need to organize our changes here to lay the basis for that work.

See the “Package Set” phase of the current compose process? It is responsible for snapshotting a set of packages from a koji tag. It ensures that, while the rest of the compose phases are running, they will all only draw from the same set of RPMs.

While it is true that inside a single compose process some phases run in parallel, this “Package Set” step acts like a giant lock around the compose as a whole. It is only after some arbitrary number of component changes have piled up in the koji tag that we produce a compose and can test them in composition. It takes the continuous wind right out of our continuous integration sails.

This wasn’t an accident. Understand that, without the “Package Set” phase, the current compose process would be susceptible to a distributed systems race condition. It may be that while a release engineer is building some images, packagers are building new rpms into the pool from the other side of the build environment. Subsequent images are then composed of different rpms. We have a mix!
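The race can be sketched in a few lines. This is a toy model, not pungi code: the tag is a plain dict, and `build_image`, the package names, and the versions are all made up for illustration.

```python
# Toy model of the race the "Package Set" phase prevents.
# Assumption: a koji tag is modeled as a dict of package name -> NVR.

def build_image(name, packages):
    """Pretend to build an image; return its name and the NVRs it was built from."""
    return {"image": name, "rpms": sorted(packages.values())}

# Without a snapshot: a packager lands a new build between two image builds.
tag = {"foo": "foo-1.2-2", "bar": "bar-3.0-1"}
live_first = build_image("live-media", tag)
tag["foo"] = "foo-1.2-3"            # concurrent change from the other side
live_second = build_image("install-media", tag)
mixed = live_first["rpms"] != live_second["rpms"]

# With a snapshot: every later phase reads the same frozen copy.
tag = {"foo": "foo-1.2-2", "bar": "bar-3.0-1"}
package_set = dict(tag)             # stands in for the "Package Set" phase
snap_first = build_image("live-media", package_set)
tag["foo"] = "foo-1.2-3"            # same concurrent change
snap_second = build_image("install-media", package_set)
consistent = snap_first["rpms"] == snap_second["rpms"]
```

Here `mixed` ends up true and `consistent` ends up true: the snapshot buys consistency at the price of ignoring everything that lands in the tag after it is taken.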

How bad is this?

- It makes RCM crazy. They’re responsible for producing the composes. If some images succeed but others fail, some are good but others bad, how can they anticipate the difference? Accordingly, we freeze the package set to keep things consistent.

- It makes it difficult to determine if we have fixed critical bugs or not. If images built at slightly different times are built from different pools of rpms, there’s no way to tell if the critical fix in rpm foo-1.2-3 is included in all of the compose artifacts or not. Accordingly, we freeze the package set so we can audit the compose.

Both of these concerns melt away when we start to talk about a continuous compose.

The Continuous Compose

The idea here is that, instead of waiting for releng to run the compose scripts manually or for cron to run them nightly, we trigger subsequent parts of the compose in response to message bus events. The plan is described in the Automating Throughput document. We won’t duplicate that here. Instead, focus for now on the problems that we solved “for free” when our compose process involved locking itself off from the rest of the build system.
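To make the shape of this concrete, here is a minimal in-process sketch of event-triggered compose steps. It is not the real message bus: the `Bus` class, the topic names (`build.tagged`, `repo.regenerated`), the tag name, and the handlers are all hypothetical stand-ins.

```python
# Minimal in-process stand-in for a message bus (hypothetical, for illustration).
class Bus:
    def __init__(self):
        self._handlers = {}

    def subscribe(self, topic, handler):
        self._handlers.setdefault(topic, []).append(handler)

    def publish(self, topic, message):
        # Deliver the message to every handler registered for this topic.
        for handler in list(self._handlers.get(topic, [])):
            handler(message)

log = []   # records which steps ran, in order
bus = Bus()

def regenerate_repo(msg):
    log.append(("repo", msg["tag"]))
    # Finishing one step announces itself so that later steps can react.
    bus.publish("repo.regenerated", {"tag": msg["tag"]})

def build_container(msg):
    log.append(("container", msg["tag"]))

def build_live_media(msg):
    log.append(("live-media", msg["tag"]))

bus.subscribe("build.tagged", regenerate_repo)
bus.subscribe("repo.regenerated", build_container)
bus.subscribe("repo.regenerated", build_live_media)

# A single component change fans out into downstream artifact builds.
bus.publish("build.tagged", {"tag": "f26-build", "nvr": "foo-1.2-3"})
```

Nothing here waits for a person or for cron. Each step runs because the step before it said it was done.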

To solve both the consistency and auditability problems, we need to be sure that we keep a record of every artifact’s manifest. On its own, this solves the auditability problem.
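As a rough sketch of why a manifest record is enough for auditing, assume each artifact’s manifest is simply the set of NVRs that went into it. The storage (an in-memory dict), the function names, and the artifact ids below are hypothetical.

```python
# Assumption: a manifest is the set of RPM NVRs an artifact was built from.
manifests = {}   # artifact id -> frozenset of NVRs

def record_manifest(artifact_id, nvrs):
    """Store the manifest at build time, once per artifact."""
    manifests[artifact_id] = frozenset(nvrs)

def artifacts_missing(nvr):
    """Audit: which recorded artifacts do NOT contain the given build?"""
    return sorted(a for a, m in manifests.items() if nvr not in m)

record_manifest("container-20170329.0", ["foo-1.2-3", "bar-3.0-1"])
record_manifest("live-media-20170329.0", ["foo-1.2-2", "bar-3.0-1"])

# Is the critical fix in foo-1.2-3 everywhere yet?
missing = artifacts_missing("foo-1.2-3")
```

In this example `missing` names the live media, which was built before the fix landed. The question that the frozen package set used to answer is now answered by a lookup.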

For consistency’s sake, if we have continuous delivery of compose artifacts, we can expect each of them to be different while changes are being made to the pool of components (a bad thing), but we can assume that we’ll reach eventual consistency once the changes have propagated. The trick is knowing how to assert consistency. For that, we only need to compare the manifests across our latest set of artifacts.
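One way to read “compare the manifests” is the following sketch. Artifacts legitimately contain different subsets of packages, so instead of requiring identical manifests it assumes that consistency means no package appears at two different versions across the latest artifacts. The manifest layout (name mapped to version-release) and all names are hypothetical.

```python
def find_disagreements(latest):
    """Return {package: {artifact: version}} for packages whose version
    differs between the latest artifacts. Empty dict means consistent."""
    seen = {}
    for artifact, manifest in latest.items():
        for name, version in manifest.items():
            seen.setdefault(name, {})[artifact] = version
    return {
        name: by_artifact
        for name, by_artifact in seen.items()
        if len(set(by_artifact.values())) > 1
    }

latest = {
    "container": {"foo": "1.2-3", "bar": "3.0-1"},
    "live-media": {"foo": "1.2-2", "bar": "3.0-1", "baz": "0.9-4"},
}
disagreements = find_disagreements(latest)

# Later, the live media is rebuilt against the newer foo.
latest["live-media"]["foo"] = "1.2-3"
settled = find_disagreements(latest)
```

While changes are in flight, `disagreements` points at `foo`. Once the rebuild lands, `settled` is empty, which is the assertion of consistency we were after.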

Continuous Integration

As far as CI goes, we need to be able to produce artifacts more quickly, yes. But the general pattern and architecture by which tests are run and their results recorded is out of our hands. That belongs to the QE organization, with whom we’ll cooperate!

There are a handful of tasks executed and recorded by the Errata Tool (the internal gating tool) that fulfill a QE role and have furthermore been the subject of complaint. We have some tools that do static analysis of components, but they do not run until an advisory is created in the Errata Tool. We simply need to decouple their execution from the Errata Tool and schedule it in response to other bus events (like dist-git commits). This is already on the Errata Tool roadmap, and will go a long way toward getting quicker feedback to developers.