The so-called "Factory 2.0"

This document is the introduction to a series. Subsequent documents are:

- Modelling Dependencies

- De-serializing the Pipeline

- Automating Throughput

- Flexible Cadence

- Modularity

- Removing Artifact Assumptions

Preface, and misconceptions

Factory 2.0 is a Red Hat-funded initiative out of PnT DevOps to make some big changes to the "build-to-release" pipeline, a.k.a. the "Factory". One of the most difficult parts of this project has been figuring out what Factory 2.0 even is (and what it isn’t). As with so many things, if you get 5 people in a room and ask them what Factory 2.0 is, you’ll get 6 different answers. This document will try to dispel some of that ambiguity.

I've heard people talk about Factory 2.0 as a "system" in the way we think of Bodhi or Koji as a system. In some of our problem-statement interviews, people talk about how "excited they are for Factory 2.0 to land" and how they can't wait to "submit requests to the system". This is worrisome. Factory 2.0 won't be a single webapp. We're talking about the pipeline as a whole: the sum of all our hosted systems.

Another buzz about town is that Factory 2.0 will be a from-scratch rewrite of our pipeline. It won’t be a rewrite of every tool we run. We’re going to be looking at incremental tweaks that span the pipeline and that line up with a long-term vision, informed by best practices and project needs (as best we can figure those two things out).

Specifically, what’s the problem?

With so many people having so many different ideas about what we should do, we set out to define the problem systematically. We spent most of FY17 Q1 gathering input from stakeholders and assembling a list of "problem statements" (a.k.a. complaints!). We then posed that list back to our stakeholders in the form of a survey, collected the results, and wound up with the following prioritized list.

- Repetitive human intervention makes the pipeline slow. This is true from the Engineering side (think CDW, manual packaging, building, submitting advisories, etc.) as well as from the releng side (think manual composes, scripts to move things around in the published tree, management of dist-git and koji tags, etc.). Many of these things, though not all, are being taken up in “Factory 1.0” initiatives (an entire "pillar" dedicated to automation was formed in Red Hat's PnT DevOps organization to address this!). However, since Fedora Modularity will only amplify the problems, we need to address them specifically in Factory 2.0 plans (as a supplement to existing efforts, not a replacement).

- Unnecessary serialization makes the pipeline slow. This is true from multiple perspectives as well. Containers can’t be built with rpms until those rpms have become part of a compose. A compose won’t include new rpms until they are part of an advisory. An advisory can’t be created until Bugzilla bugs are ACK’ed. RPMs can’t be tested as a group (project insanity, etc.) until an advisory is created. Integration happens too late in the pipeline, which means problems are identified too late in the pipeline.

- The pipeline imposes an often rigid cadence on products. Think of the schedules of the standard Fedora releases versus Fedora Atomic Host here. Inflexibility in our tools (with respect to release cadence) encourages teams to invent their own tooling, away from the mainstream pipeline, which only exacerbates our continuous integration problems.

- The pipeline makes assumptions about the content being shipped. Think about the kind of work that had to go into getting us to the point where we can ship docker containers -- and even then, hacks and workarounds are required. What kind of challenges will future artifacts pose? If we’re going to be retooling most of our systems, it would behoove us to be forward-thinking in our changes. Make things flexible so we only need to implement a way to build flatpak containers, and voila, they can flow seamlessly through pungi, PDC, Bodhi, and to the mirrors. That’s the dream.

- Products are defined by packages, not “features”. In Red Hat, Product Management just doesn’t want to deal with lists of thousands of components when defining products. In turn, think about how difficult it is for a new person to remix Fedora. Fedora Modularity is the answer here, but we (infrastructure and release engineering) need to be involved from the start. Fedora Modularity is one part devel, one part infra. No less!

- There’s no easy way to trace deps from upstream to product (through all intermediaries). Think of the glibc rebuild of the world and all the manual work that entailed. We have some ways to model deps of docker containers (OSBS rebuilds), and we have some ways to model deps of rpms, and productmd manifests model deps between rpms and image artifacts, but we don’t have an easy way (a queryable service) to model the entire dep chain. Therefore, we can’t build general tooling to orchestrate rebuilds. When considering a change to a product, we can’t easily identify which engineering teams might be affected. Conversely, when considering a change to a component, we can’t easily identify which products might be affected. If we had this visibility, we could associate a potential change with a cost, and assess whether the benefit of that change outweighs the cost. We could scale this all the way up to the cost for productizing, releasing, and maintaining a new product.
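To make the "queryable service" idea in the last item a little more concrete, here is a minimal Python sketch of the kind of question such a service would answer: given a change to one component, which downstream artifacts and products might be affected? Everything in it is hypothetical. The artifact names, the `DEPENDS_ON` mapping, and the `affected_by` function are made up for illustration and are not the API of PDC or of any existing tool.

```python
from collections import defaultdict, deque

# Hypothetical sample data: each artifact maps to the artifacts it is built from.
DEPENDS_ON = {
    "glibc-rpm": ["glibc-upstream"],
    "httpd-rpm": ["glibc-rpm"],
    "httpd-container": ["httpd-rpm"],
    "base-image": ["glibc-rpm"],
    "example-product": ["httpd-container", "base-image"],
}


def reverse_edges(depends_on):
    """Invert the mapping so each artifact lists what is built from it."""
    used_by = defaultdict(set)
    for artifact, inputs in depends_on.items():
        for dep in inputs:
            used_by[dep].add(artifact)
    return used_by


def affected_by(changed, depends_on):
    """Return every artifact that transitively depends on `changed`."""
    used_by = reverse_edges(depends_on)
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in used_by.get(current, ()):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen


if __name__ == "__main__":
    # Which artifacts would a change to upstream glibc touch?
    print(sorted(affected_by("glibc-upstream", DEPENDS_ON)))
```

The same graph, walked in the forward direction, would answer the converse question (which components feed a given product). The hard part described above is not the traversal but getting heterogeneous dependency data into one consistent, queryable place.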

Analysis and Order of Execution

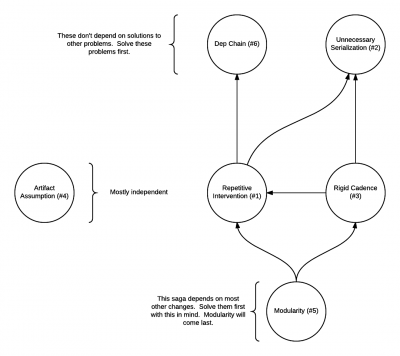

First, let’s rule out the Artifact Assumption (#4) problem. It is unlike our other problem statements in two ways. For one, it is not quantitatively measurable; it is not a deliverable. Being able to say that we solved that problem requires a qualitative evaluation of the code in our pipeline. It is really about fighting technical debt. For another, none of the solutions to the other problem statements require it, and it requires none of them. It is independent.

Let’s now consider the remaining five problem statements: Repetitive Intervention (#1), Unnecessary Serialization (#2), Rigid Cadence (#3), Dep Chain (#6), and Modularity (#5). In what order do we tackle them? What dependencies exist between their solutions?

The Dep Chain (#6) problem is mostly independent. As described in the original statement, we have dependency information scattered throughout our systems, but it is heterogeneous, inconsistent, and hard to find. We already have a plan for how to integrate this in the Fedora Modularity efforts (video) via PDC.

The Unnecessary Serialization (#2) problem can be solved mostly independently. It is both deep and broad (involving nontrivial changes in many different tools) and is therefore difficult. It is mostly about moving the gating logic peppered throughout all our tools down the chain to Errata Tool/Bodhi and removing any restrictions in tools that presume some preliminary gating.

The Repetitive Intervention (#1) problem, on the other hand, has two dependencies -- we require repetitive human intervention from Engineering and RCM for two reasons. First, our tooling does not have general knowledge about the interrelations of the content being shipped (i.e., the Dep Chain (#6)). Second, our tooling does not have general knowledge about the interrelations of our tooling doing the shipping (i.e., Unnecessary Serialization (#2)). Humans have to be involved to 1) do the right thing 2) at the right time. Solving this problem therefore requires solving those other two problems first.

Next, why do we have a Rigid Cadence (#3) problem? There is an intrinsic, implicit cadence to the pipeline defined tautologically as: the pipeline is as fast as it is. Since we want everything to flow through the same pipeline for maintainability, auditability, reproducibility, etc., every product’s cadence can only be as fast as the whole pipeline is slow. Improving general pipeline throughput means we can look at varying cadence. Improving general pipeline throughput is a prerequisite for flexible cadences. Improving general pipeline throughput is exactly what the Unnecessary Serialization (#2) and Repetitive Intervention (#1) problems are about.

Lastly, Modularity (#5). What a beast. It challenges deeply held assumptions in our pipeline about lifecycle (and Rigid Cadence (#3)). The combinatorial explosion of builds, buildroots, tags, targets, channels, layered products, etc. means that life is no longer possible if Repetitive Intervention (#1) is the norm. Just as all roads lead to Rome, all Factory 2.0 problems lead to Modularity (#5).
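The ordering argument above is, in effect, a topological sort over the problem statements. As a rough sketch, it can be written down with Python's standard `graphlib` module. The `PREREQUISITES` mapping below is one reading of the preceding paragraphs, not an official plan, and the name `execution_order` is made up for this example.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each problem maps to the problems whose solutions it needs first,
# as read from the analysis above (an interpretation, not an official plan).
# Artifact Assumption (#4) is left out because it is independent of the rest.
PREREQUISITES = {
    "Dep Chain (#6)": set(),
    "Unnecessary Serialization (#2)": set(),
    "Repetitive Intervention (#1)": {
        "Dep Chain (#6)",
        "Unnecessary Serialization (#2)",
    },
    "Rigid Cadence (#3)": {
        "Unnecessary Serialization (#2)",
        "Repetitive Intervention (#1)",
    },
    "Modularity (#5)": {
        "Rigid Cadence (#3)",
        "Repetitive Intervention (#1)",
    },
}


def execution_order(prerequisites):
    """Return one order in which every problem comes after its prerequisites."""
    return list(TopologicalSorter(prerequisites).static_order())


if __name__ == "__main__":
    for step, problem in enumerate(execution_order(PREREQUISITES), start=1):
        print(step, problem)
```

Dep Chain (#6) and Unnecessary Serialization (#2) have no prerequisites, so they may come out in either order. Under this reading, the remaining three are forced: Repetitive Intervention (#1), then Rigid Cadence (#3), then Modularity (#5).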

A Twist!

The above analysis of requirements between problems and their solutions is helpful, but in real life we have a twist! We’ve identified here that Modularity (#5) should come last among problems that we try to solve. However, as I’m writing this we have an elite cross-functional scrum team already at work in Fedora trying to make modularity a reality. It is happening first!

Not all is lost. This document is in part a product of the DevOps partnership with that team, discovering the problem relationships as we try to solve Modularity. For instance, the Dep Chain (#6) and Repetitive Intervention (#1) problems have already been identified there and have subteams actively working on them.

To not shoot ourselves in the foot

The modularity working group is fond of quoting: "The modularity effort will give us lots of power, enough to shoot ourselves in the foot." The same goes for us here: we could easily increase unpredictable demands on releng's time if we don't make our own organizations (PnT DevOps and Fedora Infra) into first-class stakeholders in the design. We can’t let ourselves hack our way through all this in order to deliver on time, or we (PnT DevOps and Fedora Infra) will suffer more technical debt than we do today.