| Line 23: | Line 23: | ||

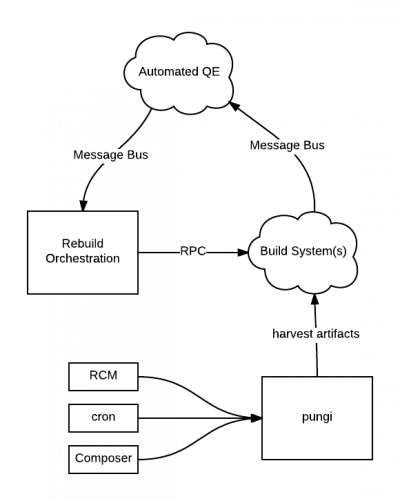

Pungi currently works by (at the request of cron or a releng admin) scheduling a number of tasks in koji. Deps are resolved, repos are created, and images (live, install, vagrant, etc..) are created out of those repos. This takes a number of hours to complete and when done, the resulting artifacts are assembled in a directory called The Compose. Some CI and manual QA work is done to validate the compose for final releases, and it is rsynced to the mirrors for distribution. | Pungi currently works by (at the request of cron or a releng admin) scheduling a number of tasks in koji. Deps are resolved, repos are created, and images (live, install, vagrant, etc..) are created out of those repos. This takes a number of hours to complete and when done, the resulting artifacts are assembled in a directory called The Compose. Some CI and manual QA work is done to validate the compose for final releases, and it is rsynced to the mirrors for distribution. | ||

[[File:Automation-pungi.png|center|400px]] | |||

With the introduction of modules, we’re rethinking The Compose. Without automation, we’ll have an explosion in the amount of time taken to build all of the repos for all of those combinations which is why we’re going to break out a good deal of that work into the orchestrator, which will pre-build the parts the constitute a compose, before we ask for them. | With the introduction of modules, we’re rethinking The Compose. Without automation, we’ll have an explosion in the amount of time taken to build all of the repos for all of those combinations which is why we’re going to break out a good deal of that work into the orchestrator, which will pre-build the parts the constitute a compose, before we ask for them. | ||

Revision as of 18:01, 24 June 2016

This document is part of a series on Factory2.

Automating Throughput

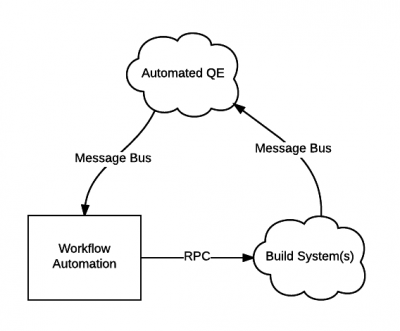

Looking at the problem of Repetitive Intervention (#1), there are a lot of sub-problems we could consider. However, as mentioned in the overview document, we’re going to rule out most of the projects currently being worked on by the PnT DevOps Automation pillar. That work is very important, but we’re going to say it constitutes “Factory 1.0” work.

For Factory 2.0 purposes, we’ll limit ourselves to thinking about Modularity (#5) and the new burdens it will create for pipeline users. With Modularity, we’re allowing the creation of combinations of components with independent lifecycles. There’s the possibility of a combinatorial explosion in there that we’ll need to contain. We’ll do that in part by vigorously limiting the number of supported modules with policy (which is outside the scope of Factory 2.0), and by providing infrastructure automation to reduce the amount of manual work required (which happens to be in scope for Factory 2.0).

Recall that the work here on Repetitive Intervention (#1) depends on our solutions to two other problems: the dependency graph problem, Dep Chain (#6), and Unnecessary Serialization (#2).

- Rebuild automation of modules and artifacts cannot proceed if we don’t have access to a graph of our dependencies.

- Rebuild automation of artifacts cannot proceed if we haven’t deserialized some of the interactions in the middle of the pipeline. We can’t automatically rebuild containers built from modules while that container build depends erroneously on a full Compose.

For Engineering

We’re reluctant to build a workflow engine directly into koji itself. It makes more sense to relegate koji to building and storing metadata about artifacts and to instead devise a separate service dedicated to scheduling and orchestrating those builds.

When a component changes, the orchestrator will be responsible for asking what depends on that component (by querying PDC), and then scheduling rebuilds of those dependent modules directly. Once those module rebuilds have completed and have been validated by CI, the orchestrator will be triggered again to schedule rebuilds of a subsequent tier of dependencies. This cycle will repeat until the tree of dependencies is fully rebuilt. In the event that a rebuild fails, or if CI validation fails, maintainers will be notified in the usual ways. A module maintainer could then respond by manually fixing their module and scheduling another module build, at which point the trio of systems would pick up where they left off and would complete the rebuild of subsequent tiers (stacks and artifacts).
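The tiered rebuild cycle described above can be sketched roughly as follows. This is a minimal illustration, not the orchestrator's actual design: the `dependents` mapping stands in for whatever a PDC query would return, and `build`, `validate`, and `notify` are hypothetical callbacks standing in for koji, CI, and maintainer notification. It also assumes the dependency graph has no cycles.

```python
def affected(dependents, changed):
    """Return everything that depends, directly or transitively, on `changed`."""
    seen = set()
    stack = [changed]
    while stack:
        node = stack.pop()
        for dep in dependents.get(node, ()):
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return seen


def rebuild_tiers(dependents, changed):
    """Group affected items into tiers; each tier only needs earlier tiers.

    `dependents` maps a name to the names that directly depend on it
    (a hypothetical stand-in for a PDC query). Assumes an acyclic graph.
    """
    todo = affected(dependents, changed)
    # For each affected item, count the affected items it must wait for.
    waiting = {name: 0 for name in todo}
    for name in todo:
        for dep in dependents.get(name, ()):
            waiting[dep] += 1
    tier = sorted(name for name, count in waiting.items() if count == 0)
    tiers = []
    while tier:
        tiers.append(tier)
        next_tier = []
        for name in tier:
            for dep in dependents.get(name, ()):
                waiting[dep] -= 1
                if waiting[dep] == 0:
                    next_tier.append(dep)
        tier = sorted(next_tier)
    return tiers


def orchestrate(dependents, changed, build, validate, notify):
    """Rebuild tier by tier; stop and notify if a build or CI check fails."""
    for tier in rebuild_tiers(dependents, changed):
        failed = [name for name in tier if not (build(name) and validate(name))]
        if failed:
            notify(failed)
            return False
    return True
```

Stopping at the first failed tier mirrors the text: later tiers wait until a maintainer fixes the module and a new build re-triggers the cycle.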

For releng

Pungi currently works by (at the request of cron or a releng admin) scheduling a number of tasks in koji. Dependencies are resolved, repos are created, and images (live, install, vagrant, etc.) are created out of those repos. This takes a number of hours to complete and, when done, the resulting artifacts are assembled in a directory called The Compose. Some CI and manual QA work is done to validate the compose for final releases, and it is rsynced to the mirrors for distribution.

With the introduction of modules, we’re rethinking The Compose. Without automation, the time taken to build all of the repos for all of those combinations would explode. That is why we’re going to break out a good deal of that work into the orchestrator, which will pre-build the parts that constitute a compose before we ask for them.

Pungi’s job then will be reduced to harvesting those pre-built artifacts. In the event that those artifacts are not available in koji, pungi will of course have to schedule new builds for them before proceeding.
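As a rough sketch of that harvesting behaviour: the function below collects whatever is already built and only schedules a build for what is missing. The `prebuilt` mapping and the `schedule_build` callback are hypothetical stand-ins for a koji lookup and a koji build request; they are not pungi's or koji's real interfaces.

```python
def harvest(wanted, prebuilt, schedule_build):
    """Assemble a compose from pre-built artifacts, building only the gaps.

    `wanted` is an iterable of artifact names, `prebuilt` maps names to
    already-built artifacts, and `schedule_build(name)` is a hypothetical
    callback that builds a missing artifact and returns it.
    """
    compose = {}
    for name in wanted:
        artifact = prebuilt.get(name)
        if artifact is None:
            # Not pre-built by the orchestrator: fall back to a fresh build.
            artifact = schedule_build(name)
        compose[name] = artifact
    return compose
```

In the common case every artifact is found in `prebuilt` and `schedule_build` is never called, which is where the time saving would come from.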

For QE

Consider the “Automated QE” cloud in the above diagrams. At its core, we expect that work to be owned by the fedora-qa team. The tools promoted to manage that are taskotron and resultsdb in Fedora, but there is a different set used internally. We'll have to do some work to make sure that our tools don't go split-brain here while developing for one environment or the other.

We’re primarily interested in results. Did they pass? Did they fail? Hopefully, we can use resultsdb as a mediating layer between our orchestration system and the CI system. We'll use resultsdb directly in Fedora. For internal PnT DevOps use, we'll set up an adapter service to shuttle results over to resultsdb as they become available in the internal CI system.
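To illustrate the mediating-layer idea, here is a minimal sketch of what such an adapter might do: translate a record from the internal CI system into a common pass/fail shape that the orchestrator can query. The `Result` fields and the internal record keys (`artifact`, `job`, `status`) are assumptions made up for this example; they are not the resultsdb schema or any real CI system's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """Hypothetical common result shape shared by both environments."""
    item: str      # the artifact that was tested
    testcase: str  # the check that ran
    outcome: str   # "PASSED" or "FAILED"


def adapt(internal):
    """Translate a hypothetical internal CI record into a Result."""
    outcome = "PASSED" if internal["status"] == "success" else "FAILED"
    return Result(item=internal["artifact"], testcase=internal["job"], outcome=outcome)


def ready_for_next_tier(results, item):
    """True only if the item has at least one result and none of them failed."""
    mine = [r for r in results if r.item == item]
    return bool(mine) and all(r.outcome == "PASSED" for r in mine)
```

Because the orchestrator only looks at the common shape, it would not need to know which CI system produced a given result.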